Recently, I had to hunt down what might politely be termed “discussion” around the polls for Rebekah’s excellent piece showing why polls taken too far out from an election don’t predict results very well.

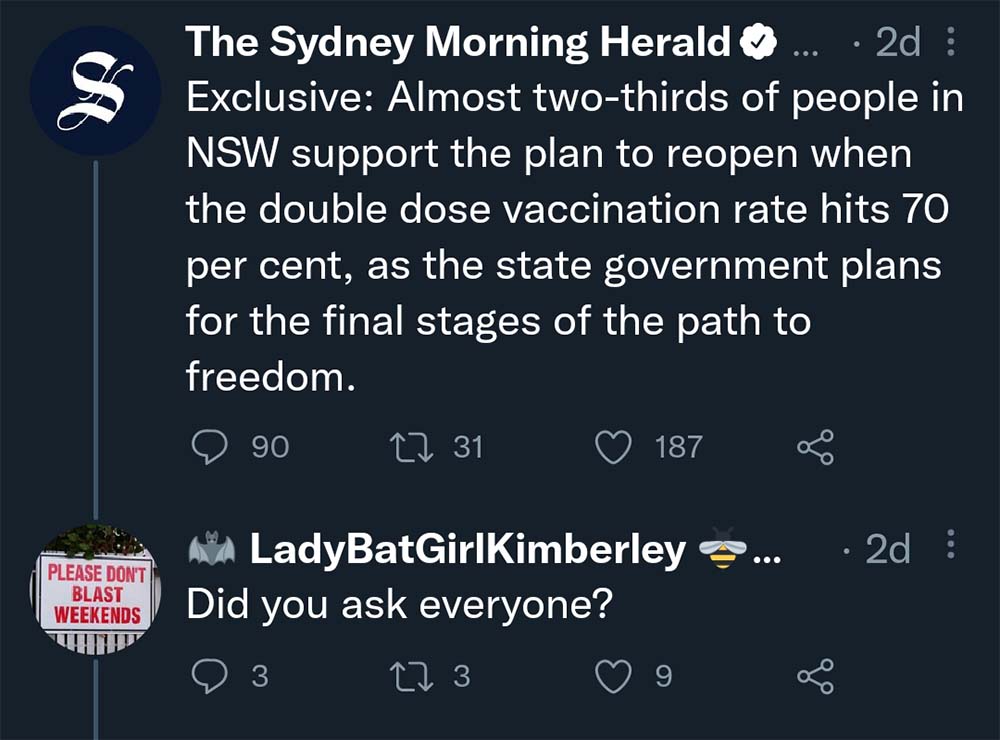

Let’s leave the delightful individuals who call people intending to vote for the other side “stupid”, “idiot”, etc. to one side for now (preferably on the front porch for weekly collection). I’m more interested in the people who reject the polls for these types of reasons:

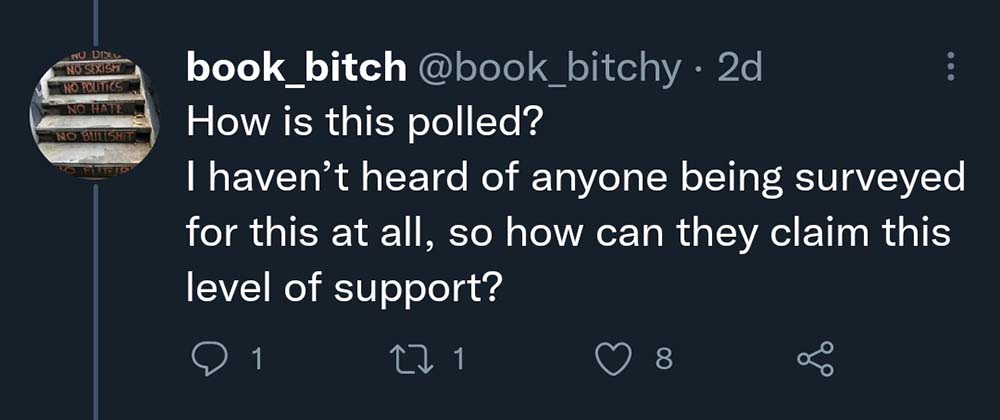

Or the other kind of polls-can’t-be-right-because commentary – the ad hominem:

Firstly, Newspoll has been one of the least biased pollsters in Australian elections. Secondly, at the 2019 Australian federal election, the final Newspoll correctly saw the Coalition leading 51-49 in NSW, so no idea where this comes from. Can we at least ensure that the points we cite in favour of our tweets are correct even if they’re misleading as usual?

Frankly, I find that such hypotheses about intentional bias in public polling don’t make any sense. From the evidence on bias in public Australian polling, to the monetary incentives surrounding polling, to what we know of human psychology, there is no reason why pollsters and the major media sources commissioning them would intentionally bias their results for some political purpose, or even allow an unintentional bias to mess with their results.

(Push polls, internal party polling, and polls conducted by a known lobbying group are of course a different matter altogether. Following Dr Bonham’s definitions and terminology, I refer to these as “commissioned polling”.)

Here’s why.

Firstly, there is no evidence of any systematic skew in Australian polling.

I covered this in much more depth in my piece analysing the possibility of a shy Tory effect in Australian polls:

Is There A Shy Tory Effect In Australian Polling?

Examining whether Australian polls tend to under-estimate support for conservative parties and causes, as well as determining if any effects are due to “shy Tories” or to sampling issues.

But, briefly, there is no evidence of any kind of skew against either the Coalition or One Nation in Australian polling. Graphically, here’s a swarm chart of every polling error in the Coalition’s vote since the 1980s (each dot represents one polling error):

I’m of the opinion that this alone should dismantle any notion of bias in Australian polling – there is no systematic bias over time to either side! If public polls were biased in some form, why wouldn’t it show up in a simple average of all polling errors?
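To make the “simple average” idea concrete, here is a minimal sketch of the calculation. The error values are hypothetical placeholders I made up for illustration (they are not real polling data), and the function names are my own. The point is only that the average signed error measures skew, while the average absolute error measures how big the misses are regardless of direction.

```python
from statistics import mean

# Hypothetical signed errors (poll minus result, in points) for one side's vote.
# Positive = the poll over-estimated that side. These are NOT real polling data.
hypothetical_errors = [1.8, -2.1, 0.6, -0.9, 2.4, -1.5, -0.3]


def signed_bias(errors):
    """Average signed error: close to zero when misses in each direction cancel out."""
    return mean(errors)


def typical_miss(errors):
    """Average absolute error: the size of a typical miss, ignoring its direction."""
    return mean(abs(e) for e in errors)


print(f"mean signed error:   {signed_bias(hypothetical_errors):+.2f} points")
print(f"mean absolute error: {typical_miss(hypothetical_errors):.2f} points")
```

A pollster can have a sizeable typical miss and still show no skew; a rigged poll would need the signed average to sit persistently on one side of zero.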

But let’s indulge the rigged-polling-theorist mindset here, and engage in some motivated reasoning.

What if Murdoch et al only get their pollsters to introduce an intentional bias into their polls at a small number of elections? Or intentionally introduce biases in the opposite direction at some elections? What discourages them from doing so?

Secondly, pollsters are incentivised to make their polls as accurate as possible

Have you heard the Tragedy of Roy Morgan and the Bulletin?

I thought not.

It’s not a story the biased-polling theorists would tell you.

Basically, the Australian pollster Roy Morgan used to be the house pollster for the now-defunct magazine the Bulletin. However, at the 2001 Australian federal election, Morgan published a poll showing Labor ahead 54.5-45.5 on the two-party-preferred vote (2pp), standing by their numbers despite Newspoll showing Labor behind 47-53.

Unfortunately for them, this was the Tampa and 9/11 election, where John Howard’s Coalition proceeded to beat Labor 51-49. After the fiasco, Morgan lost their contract as the Bulletin’s in-house pollster, with their 5.5-point final polling error being the largest I’ve found so far in Australian federal final polling history.

(Something similar happened to Ipsos after the 2019 Australian polling error.)

When pollsters have stuck their necks out and failed, they have taken fire for it. In some cases, contracts have been ended or not renewed by major media sources. In others, pollsters have had to expend resources to develop new methodologies (e.g. Newspoll abandoning robo-polling after the 2019 error). Furthermore, polling errors at elections are often publicised and sensationalised by media outlets, damaging trust in the entire opinion polling and market research industry (and especially in the pollsters who stuffed up).

To finish off this section, I’d like to pose two questions, one of which I’ve brought up before on Twitter:

Let’s say you’re a pollster. Historically, your polling error on a left/right basis has ranged from -1% to +3%. Do you:

- Do nothing: risk being embarrassed, losing your contract, and turning into one of those pollsters who no one trusts or hires because “Oh, aren’t they biased?”

- Get to the bottom of this and adopt a model which shifts your topline result by -1%. Either your polls land within ±2% of the actual result, making you one of the most accurate pollsters in the business, or something goes wrong and you’re back where you started.
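The arithmetic behind the second option can be sketched in a few lines. The -1 to +3 range and the -1 shift come from the scenario above; the function name and the “worst-case miss” framing are my own illustrative assumptions.

```python
def recentre(error_range, shift):
    """Apply a constant shift to both ends of a (low, high) error range."""
    low, high = error_range
    return (low + shift, high + shift)


# Historical left/right error range from the scenario above, in points.
historical = (-1.0, 3.0)

# Shifting the topline by -1 re-centres the range around zero.
adjusted = recentre(historical, -1.0)

# Worst-case miss in either direction, before and after the adjustment.
worst_before = max(abs(x) for x in historical)
worst_after = max(abs(x) for x in adjusted)

print(adjusted)                    # (-2.0, 2.0)
print(worst_before, worst_after)   # 3.0 2.0
```

The spread of the errors is unchanged at four points; the adjustment only removes the lopsidedness, which is exactly the part that gets a pollster labelled as biased.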

Now, let’s say you’ve become a gold-standard pollster, with contracts all over the world. Your major media source comes up to you and asks you to find out how, ah, popular the government is with the people in your latest poll, wink wink nudge nudge. Do you:

- Agree to it, and run the risk of being humiliated, wrecking your precious hard-earned corporate reputation, as well as increasing the risk that clients in other industries and/or countries will decline to renew contracts etc., or;

- Advise them that their oh-so-informative preambles and interesting question design are likely to skew the results. If they really want to, they can commission a separate poll dealing with this but it should not be linked to your public, voting-intention polling (and not under the same brand if possible).

Thirdly, poll analysts are incentivised to find problems with the polling

Did you hear about how accurate the polls were at the 2016 US presidential election? The two-party (Clinton/(Clinton + Trump)) error on the final polls was just 0.4% to 1.2% (depending on how you averaged them), which is lower than the error in the 2012 US presidential election and one of the smallest polling errors in US polling history. In conclusion, the polls are fine, now let’s move on to whatever it was that orange man in the White House did today.

Wait, no? You didn’t read any articles written that way after the 2016 election? You heard about election forecasters coming under attack after the election? How your friends and family swore to never trust them again?

Great! Now explain to me why you’d think that any problems with the polls would be ignored by the people who write about and analyse them.

Snark aside, those who write about and analyse the polls in the media are incentivised to find problems and bring them to light. Conflicts, disasters and accusations sell; “scientific industry gets it right again” doesn’t. Witness how a single, misleading line in the post-2019 Australian polling review spawned newspaper headlines claiming that the polls systematically over-estimated Labor, even though a review of state polling during the same period showed no bias to Labor. In the UK, a post-election review which originated the concept of the “shy Tory” has managed to get the idea repeated every time the conservative side has over-performed its polls, even when other, more mundane factors are more likely at play.

If a journalist could demonstrate that a pollster was systematically biased to one side, they would have every reason to expose the bias, especially if it originated from a pollster employed by a rival news network!

More importantly, those who make polling-based forecasts are extremely incentivised to find problems with the polls. Election forecasting as a whole took a big hit after the 2016 US presidential election; even forecasters who correctly projected a tight race (e.g. Nate Silver’s FiveThirtyEight) were panned alongside others who claimed Clinton was a 90+% favourite to win. If there truly was a systematic bias in the polls, election forecasters have every reputational, monetary and occasionally bug-eating-related incentive to find it, and adjust for it in their models.

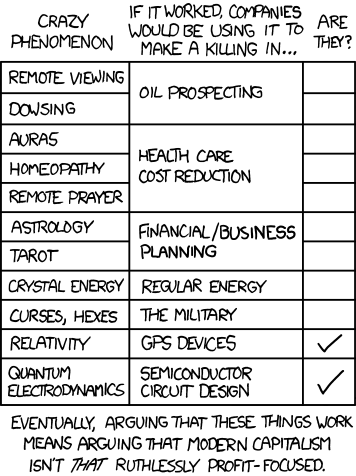

There’s a lot more I could write on this subject, but I think I’ll defer to the graphical summary from xkcd:

Finally, even if someone wanted to influence voting intention, it’s not clear how to do so

Let’s go full conspiracy-theorist, though. What if Murdoch/GetUp somehow bought out all the rival news networks, became the sole source for polling contracts, and snapped away all the poll analysts? Couldn’t they then influence public opinion through publishing biased polling?

Well, let’s see how they’d do so by turning to published research. What effects would present themselves in response to polls showing one side ahead?

First: the underdog effect.

The polls showed the Coalition as the underdog, right? And people just hate being condescended to about how one side is going to win, right? So they all turned against Labor en masse, trying to show pollsters that they’re not as predictable as they think and this is how Murdoch is manipulating Newspoll by making it seem like Labor is ahea-

Wait, sorry, no. Completely wrong, apparently! At the three most recent state elections, the side which was ahead in the polls won by more than expected. Let me tell you how it happened.

Second: the bandwagon effect.

We evolved to side with whoever looked like they were going to win, right? So when we receive information about who’s ahead, our instincts tell us to shift towards them! This is how that former Crosby-Textor pollster the Liberals planted in Resolve is influencing the uneducated masses to shift towards the Coalition by releasing outlier polls showing them ahe-

(if you’re feeling confused right now, congratulations, you should be)

Apart from my own entertainment, my point with the above is to demonstrate how utterly confusing the morass of human psychology can be. Research has found plenty of contradictory effects (note that both links go to academic sources) and it’s not always clear why one study shows a bandwagon effect while another shows an underdog effect.

Given all of that, if you wanted to, say, influence people to vote Coalition, what should you do?

You could publish polls showing the Coalition is ahead, and risk a backlash as people move towards the underdog.

Or you could release polls showing Labor is ahead, and risk voters moving towards Labor as they back the party they think will win.

Remember, pick wrongly and you risk actively harming your side. Oh, and, you don’t know if your polls are actually correct – for all you know they might be off by a few points already, making your attempts at adjustment counterproductive.

Those who fail to learn from history are doomed to repeat theories about long-slain conspiracies

I speculate that a large chunk of the reason why people still find biased-polling theories reasonable is unfamiliarity with the history of polling and poll analysis.

Like – when you hear Newspoll reported regularly and even used by a Prime Minister as cover to depose his predecessor, it might not occur to you that Newspoll holds pride of place as it has historically been one of the most accurate pollsters (federally, on the 2pp, average 1.3% error vs 1.7% error for all other pollsters).

When you see a two-party-preferred estimate published regularly with a poll, it might not occur to you that pollsters once refused to publish such estimates (leading to misleading coverage); or that the method most pollsters use nowadays to produce such estimates (last-election preferences) was only determined after a different method (asking respondents to tell you who they’d preference) proved inaccurate at several elections.
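For anyone unfamiliar with how a last-election-preferences estimate works, here is a rough sketch. Every number below is a hypothetical placeholder (not a real poll, and not any pollster’s actual flow assumptions), and the party list and function name are mine. The idea is simply that each minor party’s primary vote is split between the two majors according to how that party’s preferences flowed at the previous election.

```python
def two_party_preferred(primaries, flows_to_labor):
    """Estimate Labor's 2pp (in %) from primary votes and assumed preference flows.

    primaries: party -> primary vote share (%)
    flows_to_labor: minor party -> fraction of its preferences going to Labor
    """
    labor = primaries["Labor"]
    coalition = primaries["Coalition"]
    for party, share in primaries.items():
        if party in ("Labor", "Coalition"):
            continue
        flow = flows_to_labor[party]
        labor += share * flow
        coalition += share * (1 - flow)
    return 100 * labor / (labor + coalition)


# Hypothetical primary votes (%), for illustration only.
primaries = {"Labor": 35.0, "Coalition": 38.0, "Greens": 12.0,
             "One Nation": 5.0, "Others": 10.0}

# Hypothetical flows to Labor; a real estimate would use last-election figures.
flows = {"Greens": 0.8, "One Nation": 0.5, "Others": 0.5}
even_split = two_party_preferred(primaries, flows)

# Same poll, but with One Nation preferences assumed to split 60-40
# (the direction, towards the Coalition, is an assumption for this sketch).
flows_60_40 = dict(flows, **{"One Nation": 0.4})
skewed_split = two_party_preferred(primaries, flows_60_40)

print(f"{even_split:.1f} vs {skewed_split:.1f}")
```

With these made-up inputs, changing one minor party’s assumed flow moves the headline 2pp by half a point, which is why analysts watch for changes in preference assumptions so closely.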

When you get a call or email asking you to participate in a survey, you might not realise that pollsters shifted to such methods once they proved better than face-to-face polling.

The problem with claiming the polls are biased because of something everyone seems to know about, like landline sampling, the shy Tory factor, or sample sizes, is pretty simple: these are the exact problems which poll analysts and even some pollsters of yesteryear levelled against old polls! These are problems which have already been challenged and mostly dealt with, leaving the remains of long-resolved criticism to float about and get reforged into new conspiracy theories. (For an example of how analysts treat pollsters who don’t rectify known issues in their polling or modelling, have a look at how Dr Bonham describes Morgan polls.) It may take an election or two, but pollsters have demonstrated a willingness to adapt in the face of criticism and/or poor results (or even proactively; see Newspoll’s shift to a mixed sampling method).

This is what gives me confidence in Australian polling – the enterprise has proven to be self-correcting (you may also have heard of this description by another name: science). Even today, when pollsters do things which are known to be problematic, they are called out for it. See Dr Bonham’s comments on Essential shifting towards a respondent-allocated 2pp model or Murray Goot’s criticism of the Resolve Political Monitor’s question design for recent examples.

There is no reason to think that the people who detected that the Newspoll preference flow model had changed from assuming a 50-50 split of One Nation voters to a 60-40 split (and who regularly call out biased commissioned polling) would suddenly miss a poll with biased design.

There is no reason to think that pollsters would risk their reputation and business to produce or allow a bias in their polling; in fact they are actively incentivised against it.

There is no reason to think that published polling in Australia is biased in the first place.

The polls may or may not be wrong at the next federal election, but (with a few exceptions) there is little reason to think anyone can guess how they’ll be wrong. The main exceptions are when a pollster does something that poll analysts already know will cause problems, e.g. Resolve providing all respondents with an “Independent” option. This tends to inflate the Independent response: many respondents may want to vote for a particular independent, but not all such respondents would vote for the same independent.

I’ve also linked to criticism of Essential for switching to a respondent-allocated preference model above.

Have a good day.