Introduction

Welcome to our first Australian election forecast! If you haven’t seen it already, check out the forecast page over here.


Western Australians go to the polls on 13/March/2021 to elect a new state government, and the result is considered all but a foregone conclusion. Thanks to a highly successful pandemic response, the incumbent Premier, Labor’s Mark McGowan, is incredibly popular, with upwards of 80% of surveyed voters approving of his performance. (Yes, “Labor” is the correct spelling in the name of the Australian Labor Party. Check it out if you don’t believe me.) Leaked internal polling suggests Labor could win 66% of the two-party-preferred vote (2pp). For those unfamiliar with the concept:

Voting in Australia is conducted through a ranked-choice system, meaning that voters can rank the candidates on the ballot in order of which they would like to see elected. If no candidate gets more than 50% of the first-preference vote, the candidate with the fewest votes is eliminated, and their voters’ votes go to their 2nd preference instead. This process is repeated until just two candidates remain, enabling a way of estimating which candidate would likely have won if the other candidates had not been on the ballot.

(This also means that in Australia, concerns about “wasting your vote” are not as relevant; as long as you cast a valid vote, regardless of the party/candidate you put as your first preference, your vote will always end up with one of the final-two candidates)

In Australia, we have two major parties in politics: the centre-left Australian Labor Party and a formal Coalition between multiple conservative parties (often referred to as the Liberal/National Coalition, after its two major component parties).

In most electorates, thanks to Labor and the Coalition usually starting out with the most first-preference votes, the race often comes down to a Labor candidate and a Coalition candidate.

For races where this is not the case (e.g. the final two candidates are Labor vs Independent, or Coalition vs Green), most Electoral Commissions are able to go back to the ranked-choice ballots and calculate what share of the electorate placed the Labor candidate higher than the Coalition candidate or vice versa.

This statistic is known as two-party-preferred (2pp): a measure of what share of voters prefers Labor over the Coalition or vice versa. In the current (mostly) two-party system, it’s considered more informative than the share of first-preference votes each party received, as it allows for the calculation of other statistics (e.g. the swing between elections, or which major party is likely to win if a popular independent retired from an electorate).
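For readers who prefer code, here is a minimal sketch of the two ideas described above: counting ranked-choice ballots down to the final two candidates, and calculating a 2pp figure by checking which major party each ballot ranks higher. The function names and the toy ballots are made up for illustration, and the sketch assumes every ballot ranks every candidate; it is not how any Electoral Commission actually implements its count.

```python
from collections import Counter


def final_two_count(ballots):
    """Eliminate the lowest-polling candidate until two remain.

    Each ballot is a list of candidate names, most preferred first.
    Returns a Counter of votes for the final two candidates.
    """
    remaining = {c for ballot in ballots for c in ballot}
    while True:
        # Each ballot counts for its highest-ranked surviving candidate.
        tally = Counter({c: 0 for c in remaining})
        for ballot in ballots:
            for choice in ballot:
                if choice in remaining:
                    tally[choice] += 1
                    break
        if len(remaining) <= 2:
            return tally
        # Ties for last place are broken alphabetically (an assumption
        # made purely to keep this sketch deterministic).
        loser = min(remaining, key=lambda c: (tally[c], c))
        remaining.remove(loser)


def two_party_preferred(ballots, party_a, party_b):
    """Share of ballots (in %) which rank party_a above party_b."""
    a = b = 0
    for ballot in ballots:
        for choice in ballot:
            if choice == party_a:
                a += 1
                break
            if choice == party_b:
                b += 1
                break
    return 100 * a / (a + b)


# Toy electorate of 100 voters (hypothetical numbers).
ballots = (
    [["Labor", "Green", "Liberal"]] * 40
    + [["Liberal", "Labor", "Green"]] * 35
    + [["Green", "Labor", "Liberal"]] * 25
)
print(final_two_count(ballots))
print(two_party_preferred(ballots, "Labor", "Liberal"))
```

In this toy example the Green candidate is eliminated first and their ballots flow to Labor, so the final-two count and the 2pp happen to coincide; in a Labor vs Independent contest they would not.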

By aggregating the 2pp figure from all electorates, we can calculate what share of all voters would have preferred Labor or the Coalition.

A 66% 2pp would be an unprecedented share of the vote for any incumbent government. There have been two-party-preferred wins of roughly that size; however, most of those were an opposition defeating an unpopular government. Re-election wins very rarely come with 60+% of the 2pp. The popularity of the state government is further amplified by the unpopularity of the state opposition, the WA Liberals, whose then-leader initially suggested that there was little need for border closures.

A Want of Precedent

Most, if not all, modelling is based on historical data on how something is likely to behave given certain conditions. If the state government is of the same party as the federal government, what share of the 2pp are they likely to get, and what is the margin of error on that prediction? If Labor is up 51-49 in a poll, what is the standard error of such a poll given historical polling errors, and what probability of Labor winning does that imply?

While this is usually a valid approach to making predictions, it can break down when faced with a truly unprecedented event, or one which has only occurred once or twice before. At time of writing, Labor’s massive lead in the few polls available suggests a race where, for the Liberals to win, there would have to be a massive shift in the opinion of the electorate and a once-a-century polling error. However, we have just 30 state elections in our sample.

I opted to only use state elections from 1978, which is when most branches of the breakaway Democratic Labor Party voted to re-affiliate with the Australian Labor Party. The Labor split massively damaged Labor’s chances of winning elections both state and federal (the federal Coalition spent an unprecedented 23 years in power as a result); the decline of the DLP and the rise of other minor parties such as the Democrats set the stage for most of modern Australian politics.

(Yes, the DLP’s influence had declined long before 1978, but 1978 is a useful cutoff for other reasons mentioned below)

Furthermore, prior to the 1980s, electoral boundaries tended to favour rural areas in many states and even federally. This made it harder for the Labor Party to win government, and likely produced a source of uncertainty which (mostly) no longer exists in current-day Australian politics.

Finally, as others have noted, polling prior to 1984 (when Newspoll was introduced) was much more of a hit-and-miss thing than it is nowadays. Furthermore, very few polls from that era can still be found, and most of those tend to be cited to make claims of performance over another pollster (e.g. Morgan citing Nielsen polls to show how theirs are better). I’ve opted not to include those polls in the dataset, as they will almost certainly artificially skew accuracy estimates towards the pollsters who’ve written such puff pieces.

With so small a sample, and with the largest recorded polling error being 4.8%, it may be hard to determine whether the probability of a once-a-century error is, say, 1% or 0.5%. With very few blowout elections of this magnitude (just three in recent history come to mind – 1993 SA, 2011 NSW and 2012 QLD), we may not be able to model polling error in such an environment as effectively.

This is not helped by the fact that (at time of writing), a month out from the WA state election, there still hasn’t been a single statewide voting intention poll of WA published for two whole years. No, it’s not a lack of resources. Leaving aside state-specific pollsters (EMRS), our pollsters have decided to poll states whose elections aren’t due for a year or two such as Victoria.

There have been statewide polls of Western Australia, but they’re mostly things like Premier approval or secession. Either no one has happened to ask voting intention questions with all these polls, or they’re unwilling/afraid to publish whatever results they got. This means that any model of the WA state election will have to rely on less accurate measures of voting intention such as the fundamentals (e.g. federal drag) or non-voting-intention polls such as Better Premier/Premier approval polls unless a statewide voting intention poll comes out before the election.

So, my advice? Don’t take small shifts in our model too seriously; when you have a standard error of ± 4.7% on the 2pp (margin of error, ± 8.6%), whether Labor is at a 98% or a 99% chance of majority isn’t a big deal.

(I use a Gosset’s t-distribution, more commonly known as Student’s t, to model the 2pp vote, to account for the possibility of a large error. This is not just a choice to account for the lack of polling; even if I had voting intention polls, I would still use a t-distribution, though with higher degrees of freedom, or in plainspeak, with more certainty. I plan on writing a piece about this in the future, but when we have highly correlated polling errors similar to those seen in recent elections, the normal distribution, which assumes that the inputs are independent, is not always appropriate.)

(The polling errors quoted below are calculated by taking the winner’s share of the two-party vote in final pre-election polling and comparing it to the winner’s share of the two-party vote in the election result. So for example, if the final polling average said 50-46-4, while the election result was 48-46-6, the two-party vote would be 52.1% and 51.1% respectively, or an error of 1.0%. This allows for a better comparison with the 2pp used in Australia.)

At the same time, be skeptical of people claiming that the Liberals have some path back to victory by referring to any of the following (polling error in brackets):

- Trump’s win in the 2016 US Presidential election (1.1%)

- The Leave campaign’s victory in the Brexit referendum (2%)

- The closeness of Biden’s win in the 2020 US Presidential election (2.2%)

- The Coalition’s victory in the 2019 Australian federal election (3%)

For reference, at time of writing, our current forecast puts Labor ahead nearly 60-40. In other words, for the Liberals to win, there would have to be a polling error more than three times that of the 2019 upset (I wasn’t joking when I said “once-a-century”). Even a whole month out, the largest deviation between polling and the final result is 5.5%, meaning that there would have to be an error nearly twice as big as the previous largest error for the Liberals to win (and even then, that poll was a massive outlier, as other polls from the same timeframe were off by just 1 – 3%). Oh, it’s definitely possible. But it’s very, very unlikely.
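To make the polling-error comparison concrete, here is a small sketch of the two-party error calculation described above: strip out third parties, rescale each side to a two-party share, and compare the poll with the result. The function names and the input numbers are hypothetical, chosen only to illustrate the arithmetic.

```python
def two_party_share(winner, runner_up):
    """Winner's share (in %) of the combined vote for the top two."""
    return 100 * winner / (winner + runner_up)


def two_party_polling_error(poll_winner, poll_runner_up,
                            result_winner, result_runner_up):
    """Poll minus result, both expressed as the winner's two-party share."""
    polled = two_party_share(poll_winner, poll_runner_up)
    actual = two_party_share(result_winner, result_runner_up)
    return polled - actual


# Hypothetical race: polls said 52-44 (4% others), result was 49-47.
error = two_party_polling_error(52, 44, 49, 47)
print(round(error, 2))

# A 60-40 lead only disappears if the two-party error exceeds 10 points.
required_error = 60 - 50
print(required_error)
```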


How our model works

Step 1: Collect historical polling data and election results

One simply does not model without a theory and some data to work off. Massive thanks to fellow psephologists Dr Kevin Bonham and Ben Raue for their polling data and historical election result data respectively – they saved me weeks of research trawling for these datasets (and are both very informative psephs in their own right).

Historical polling data and past election results are both very important, as they allow us to find patterns in historical polling errors and local results (we’ll be talking about one of those in another piece), helping us to find good predictors of the election result. This allows us to consider what factors should be included in a model. For example, let’s say we had 10 polls from a pollster: in 8 of them the polling error favoured Labor, and in 2 it favoured the Liberals. Should we model this pollster as being skewed to Labor? What if a Labor government was in power during those 8 polling errors towards Labor?

Extensive datasets give us the facts we need to make decisions about how we model elections.

Part I: Modelling the vote

Step 2: Analyse the historical data, and build a model of the vote

Our vote model is designed to take multiple inputs and combine them to forecast the primary and 2pp vote. Listing our process for each input below:

- Voting intention polls – i.e. polls directly asking who a respondent intends to vote for in the upcoming state election. Usually the best predictors of the election-day result.

- We first developed priors for the model using the polling error of all final, pre-election polls which were conducted in states which use a single-winner electoral system (e.g. what is the average skew towards incumbents, what is the average deviation from the final result etc). Only the last poll from each pollster was used, and only polls with a short field period (<= 7 days) whose end-dates were within a week of the election were included for this calculation.

- These priors were then updated with data from the particular subset of characteristics we’re attempting to model, using a Bayesian updating process. For example, if I wanted to model the accuracy and skew of Newspolls conducted in Western Australia, I would update the priors using first all polling errors in Western Australia, then all Newspoll polling errors in Western Australia. This allows us to develop a posterior predictive function (read: a model which predicts what polling errors are likely to look like in the future) of polling error.

- We then calculated the covariance of any two random polls of the same election, for an estimate of how highly correlated voting intention polls are. We would have preferred to calculate pollster-specific covariance (are some pollsters more likely to make similar errors than others?), but there really aren’t enough polls in the sample to calculate such data without it being noisier than the House of Reps when someone makes a joke.
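As a rough illustration of the prior-then-update idea described for voting-intention polls, here is a sketch using the simplest conjugate case: a normal prior on the average polling skew, updated with observed errors whose variance is treated as known. This specific model, the function name and every number below are assumptions made for the sake of the example; the text above doesn’t specify which distributions the actual updating uses.

```python
def update_normal_mean(prior_mean, prior_var, errors, obs_var):
    """Conjugate normal update for the mean of a set of polling errors.

    prior_mean, prior_var: current belief about the average skew.
    errors: observed polling errors (poll minus result) to update with.
    obs_var: assumed (known) variance of an individual polling error.
    Returns the posterior (mean, variance).
    """
    n = len(errors)
    if n == 0:
        return prior_mean, prior_var
    post_precision = 1 / prior_var + n / obs_var
    post_mean = (prior_mean / prior_var + sum(errors) / obs_var) / post_precision
    return post_mean, 1 / post_precision


# Hypothetical prior built from all final pre-election polls.
prior = (0.5, 1.0)

# Hypothetical errors: first every poll in the state, then only
# one pollster's polls in that state (so those get counted twice,
# which gives the more specific data extra weight).
state_errors = [1.2, -0.4, 2.0]
pollster_in_state_errors = [1.5, 0.9]

after_state = update_normal_mean(*prior, state_errors, obs_var=2.25)
after_pollster = update_normal_mean(*after_state, pollster_in_state_errors, obs_var=2.25)
print(after_state, after_pollster)
```

Each update narrows the variance of the estimate, so sparse subsets (one pollster in one state) nudge the estimate without throwing away what the broader dataset says.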

- Premier approval/Better Premier polls – polls asking respondents whether they approve of the job their Premier is doing, or whether they prefer their current Premier or the Opposition Leader be in charge. They’re less accurate than voting-intention polls, but can still be useful in polling-scarce elections, and can be less susceptible to correlated polling errors than voting intention polls.

- The first thing to note is that both Premier approval and Better Premier scores tend to be skewed towards the incumbent. On average, the incumbent Premier is at approximately +16% on both measures, with some Premiers (VIC 1992) recording positive Better Premier scores even as they ended up losing by quite significant margins.

- Hence, all scores in the dataset were adjusted for the incumbency skew by subtracting the average Better Premier score from the incumbent’s rating.

- We then developed a logistic regression to estimate 2pp from a Better Premier score. I opted for a logistic regression over a linear regression because 1) there appeared to be some tapering off towards the right-tail of the dataset (see graph below), and 2) it makes theoretical sense – Premiers (and for that matter PMs) with sky-high approval ratings very rarely win by the same margin as their net ratings.

Scatterplot of incumbent Premiers’ leads in Better Premier polling vs the 2pp result their party got. Positive values indicate that the incumbent/their party was ahead while negative values indicate that the incumbent/their party was behind.

This choice doesn’t make much difference in the forecasted 2pp for Premiers who are polling roughly in the middle of the dataset (+10 to +30), but it does mean that we expect that a Premier who goes from, say, +60 to +70 will gain less in 2pp than one who goes from +15 to +25.

- The deviation from the actual 2pp (or regression residual) was then calculated for each value. We then used the same updating process as described above in our voting-intention model to produce a function which can be used to predict the error of our Better Premier-based regression.

- Similarly, we also calculated the covariance between Better Premier regressions for the same election where available, so as to account for correlated errors.

- In addition, we also calculated the covariance between the Better Premier regression and an average of final voting intention polls, to allow us to model how expected accuracy changes when including the Better Premier regression in our vote model (as well as considering what the best weight for the Better Premier regression would be in our vote model, when voting intention polls are available).

- Note for the statistically inclined: This is the reason why we decided to include Better Premier in our vote model when we have multiple polls. While Better Premier is definitely less accurate in predicting 2pp than voting-intention polling, the covariance between Better Premier and individual voting-intention polls is less than the covariance between voting-intention polls (0.8% vs 1.8%). Hence, once we have more than about three voting-intention polls, it can actually be useful to include the Better Premier retrodicted 2pp in the model.

The variance of voting-intention polling error is about 2.25, while the variance of Better Premier regression residuals is about 7.84.

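The formula for the variance of a sum of multiple weighted variables is given by:

$$\mathrm{Var}(aX + bY + cZ) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y) + c^2\,\mathrm{Var}(Z) + 2ab\,\mathrm{Cov}(X,Y) + 2ac\,\mathrm{Cov}(X,Z) + 2bc\,\mathrm{Cov}(Y,Z)$$

For a simple average of three polls, given $a = b = c = 1/3$, $\mathrm{Var}(X) = \mathrm{Var}(Y) = \mathrm{Var}(Z) = 2.25$, and $\mathrm{Cov}(X,Y) = \mathrm{Cov}(X,Z) = \mathrm{Cov}(Y,Z) = 1.8$,

$$\text{Variance of the polling average} = 3\left(\left(\tfrac{1}{3}\right)^2 \times 2.25\right) + 3\left(2 \times \tfrac{1}{3} \times \tfrac{1}{3} \times 1.8\right) = 1.95$$

If we include a Better Premier regression-based 2pp prediction in the mix (as $Z$, with $W$, $X$ and $Y$ being the three polls), the variance changes to:

$$\mathrm{Var}(aW + bX + cY + dZ) = a^2\,\mathrm{Var}(W) + b^2\,\mathrm{Var}(X) + c^2\,\mathrm{Var}(Y) + d^2\,\mathrm{Var}(Z) + 2ab\,\mathrm{Cov}(W,X) + 2ac\,\mathrm{Cov}(W,Y) + 2ad\,\mathrm{Cov}(W,Z) + 2bc\,\mathrm{Cov}(X,Y) + 2bd\,\mathrm{Cov}(X,Z) + 2cd\,\mathrm{Cov}(Y,Z)$$

Given $a = b = c = d = 1/4$,

$\mathrm{Var}(W) = \mathrm{Var}(X) = \mathrm{Var}(Y) = 2.25$, $\mathrm{Var}(Z) = 7.84$,

$\mathrm{Cov}(W,X) = \mathrm{Cov}(W,Y) = \mathrm{Cov}(X,Y) = 1.8$,

$\mathrm{Cov}(W,Z) = \mathrm{Cov}(X,Z) = \mathrm{Cov}(Y,Z) = 0.8$,

$$\text{Variance of the polling + Better Premier average} = 3\left(\left(\tfrac{1}{4}\right)^2 \times 2.25\right) + \left(\tfrac{1}{4}\right)^2 \times 7.84 + 6\left(\left(\tfrac{1}{4}\right)^2 \times 1.8\right) + 6\left(\left(\tfrac{1}{4}\right)^2 \times 0.8\right) \approx 1.89$$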

It’s a small gap which can easily be swamped by changes in polling accuracy, but it does grow bigger with more voting-intention polls.
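If you’d like to check the arithmetic, or see how the gap changes with more polls, the calculation is just a weighted sum over a covariance matrix. Below is a minimal sketch using the variance and covariance figures quoted above; the function and constant names are my own, and equal weights are assumed throughout.

```python
POLL_VAR = 2.25   # variance of voting-intention polling error
BP_VAR = 7.84     # variance of Better Premier regression residuals
POLL_COV = 1.8    # covariance between two voting-intention polls
BP_COV = 0.8      # covariance between Better Premier and a poll


def build_cov(n_polls, include_better_premier):
    """Covariance matrix for n polls, optionally plus Better Premier (last)."""
    size = n_polls + (1 if include_better_premier else 0)
    cov = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            i_bp = include_better_premier and i == n_polls
            j_bp = include_better_premier and j == n_polls
            if i == j:
                cov[i][j] = BP_VAR if i_bp else POLL_VAR
            elif i_bp or j_bp:
                cov[i][j] = BP_COV
            else:
                cov[i][j] = POLL_COV
    return cov


def weighted_average_variance(weights, cov):
    """Var(sum of w_i * X_i) = sum over i, j of w_i * w_j * Cov(X_i, X_j)."""
    n = len(weights)
    return sum(weights[i] * weights[j] * cov[i][j]
               for i in range(n) for j in range(n))


def compare(n_polls):
    """Variance of a simple average, without and with Better Premier."""
    polls_only = weighted_average_variance(
        [1 / n_polls] * n_polls, build_cov(n_polls, False))
    k = n_polls + 1
    with_bp = weighted_average_variance([1 / k] * k, build_cov(n_polls, True))
    return polls_only, with_bp


for n in (3, 6):
    polls_only, with_bp = compare(n)
    print(n, round(polls_only, 2), round(with_bp, 2))
```

With three polls this reproduces the 1.95 and 1.89 figures above, and with six polls the gap between the two widens.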


- Fundamentals – i.e. the background factors which influence voters’ decisions and which can also be used to predict election outcomes.

- If you’ve read the methodologies of US election forecasters like FiveThirtyEight’s Nate Silver or The Economist’s Andrew Gelman and Merlin Heidemanns, you’ll know that in national elections, factors such as the economy can be fairly good predictors of the popular vote, as many voters will eventually make up their mind based on such issues. These variables also tend to be leading indicators, meaning that more often than not, the popular vote as measured by polls will tend to move closer to a fundamentals-based prediction as election day approaches.

2pp vote share for incumbent Queensland state government in 2020, polling average versus a simple federal drag-based model. Note that not all state elections will look like this; voting-intention polling usually outperforms fundamental models by election day (but fundamentals tend to be better further out)

- We’ve examined quite a few commonly-used variables in fundamentals elsewhere; however, many don’t seem to have much correlation to an incumbent state government’s share of the 2pp. Factors such as unemployment, change in GDP growth etc tend to correlate much more strongly with the federal government’s performance, while there have not been enough instances of exceptional events (e.g. wars, pandemics) to have any confidence in their effects on voters’ decisions.

- There is, however, one variable which strongly correlates with 2pp: federal drag. This is the tendency of state governments of the same party as the federal government to do worse than state governments of a different party from the federal government.

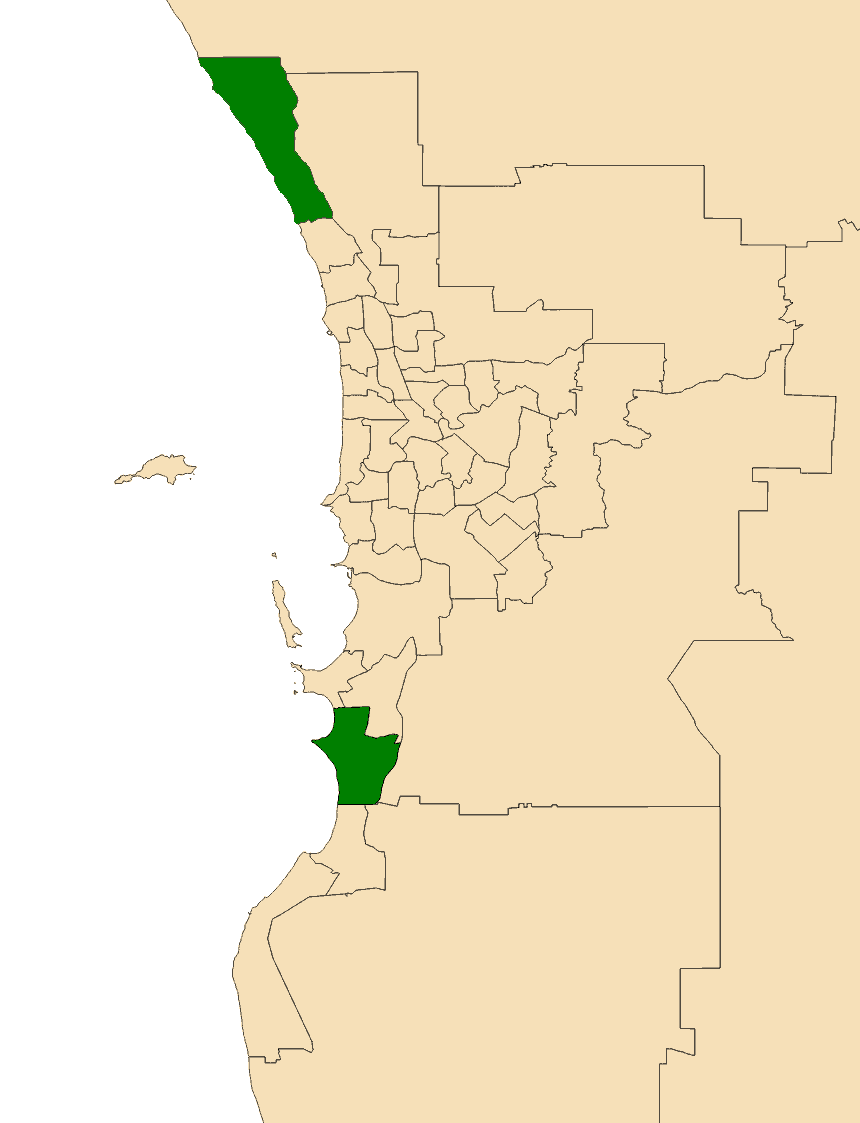

Boxplot of state governments’ 2pp margin (i.e. (50% – state government’s re-election 2pp)) for when the state government is of the same party as the federal government, and when they’re of different parties.

(this is not an original observation of mine – I’m grateful to both Antony Green and Dr Kevin Bonham for their prior work on this effect)

- As the above image demonstrates, there is a lot of variance in state governments’ 2pp results even when accounting for federal drag. Hence, by election day, a federal-drag based model will underperform voting-intention polling. However, further out from an election, when voters have yet to tune into the campaign, blending the fundamentals-based prediction with voting-intention polling can produce a more accurate prediction than either alone.

- We weight our model such that the fundamentals receive more weight further out from an election, while closer to an election (where polls are more accurate), the polls are given more weight. However, if there are no voting-intention polls and we have to rely on Better Premier/Premier approval polling, the fundamentals model will get more weight.

For those who are interested, the curve used here is a Gompertz function.

- I should note that the purple curve above (giving the fundamentals more weight) actually outperforms the black curve in backtesting on past WA state elections. The reason I’ve opted to use the black curve instead (reducing the fundamentals’ weight to nearly 0 by election day) whenever voting-intention polling becomes available is because:

- The improvement isn’t that large. It’s about 0.8%, on average, when testing polls taken within the last month of the election.

- The sample size here is very small (just 9 WA state elections) – it’s fairly plausible that the improvement is just an artifact of noisy data and a small sample size.

- While most recent state elections conform to the pattern of federal drag, in most such elections, increasing the weight of the federal drag model would have made the 2pp forecast worse, not better. Of the elections held during the last decade, just 4 of 15 would have been improved by increasing the fundamentals’ weight in our forecast (2013 WA, 2015 NSW/QLD, 2020 QLD). Hence, it might be the case that the improvement is due to some issue with older polls (respondent-allocated preferences, maybe?) which has been resolved.

- From a theoretical standpoint, it doesn’t make much sense to continue to give the fundamentals this much weight when we have voting-intention polls. They may not be perfect, but voting-intention polls directly ask voters who they intend to vote for, as opposed to attempting to infer the answer to that question based on historical patterns.

- Nevertheless, I will be analysing the difference in forecasted 2pp with the differing weights given to the federal drag model when the results come in. (That doesn’t necessarily mean that I will discard the model with less weight assigned to federal drag if it does worse on election day)
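For the curious, here is roughly what a Gompertz-shaped weight for the fundamentals looks like in code. The general form is a × exp(−b × exp(−c × t)); the three parameter values below are placeholders I’ve picked so that the weight is close to 0 on election day and levels off further out, not the fitted values used in the forecast.

```python
import math


def fundamentals_weight(days_out, ceiling=0.6, displacement=5.0, rate=0.02):
    """Gompertz curve: weight given to the fundamentals, by days to election.

    ceiling: weight the curve approaches far out from the election.
    displacement, rate: shape parameters (hypothetical values).
    """
    return ceiling * math.exp(-displacement * math.exp(-rate * days_out))


def blend(fundamentals_2pp, polls_2pp, days_out):
    """Weighted blend of the fundamentals-based and poll-based 2pp."""
    w = fundamentals_weight(days_out)
    return w * fundamentals_2pp + (1 - w) * polls_2pp


for days in (0, 30, 90, 365):
    print(days, round(fundamentals_weight(days), 3), round(blend(50.0, 60.0, days), 2))
```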


Step 3: Blend the three approaches above to produce a forecast of the vote

This part is relatively simple – I first assign the fundamentals whatever weight they should get given the number of days to election, then divide the remaining weight between voting-intention polls and the Better Premier 2pp estimator model.

The Better Premier-based 2pp estimate is only included when there are either no voting-intention polls, or more than three voting-intention polls. When it is included, the average Better Premier 2pp estimate is weighted the same as a single voting-intention poll.

When there is more than one voting-intention poll, they are weighted by sample size. More specifically, the weight assigned to each poll is , where n refers to the sample size of the poll, and nmean is the mean sample size of all polls. I will likely revise this formula if state-wide polls emerge with more than 2x the sample size of other polls (however given recent history I doubt this will happen).
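A rough sketch of the blending logic in this step is below. The relative poll weights are taken as an input rather than computed, and giving the Better Premier estimate the mean poll weight is my reading of “weighted the same as a single voting-intention poll”; both are assumptions, as are the function and argument names.

```python
def blend_vote_estimate(fundamentals, fundamentals_weight,
                        polls, poll_weights, better_premier=None):
    """Blend fundamentals, voting-intention polls and Better Premier.

    fundamentals: fundamentals-based 2pp estimate.
    fundamentals_weight: weight (0 to 1) for the fundamentals, set by
        how far out from the election we are.
    polls, poll_weights: poll 2pp figures and their relative weights.
    better_premier: Better Premier-based 2pp estimate, if available.
    """
    estimates = list(polls)
    weights = list(poll_weights)
    n_polls = len(polls)

    # Better Premier is only used with no polls, or more than three.
    if better_premier is not None and (n_polls == 0 or n_polls > 3):
        estimates.append(better_premier)
        # Assumption: weight it like an average-sized single poll.
        weights.append(sum(poll_weights) / n_polls if n_polls else 1.0)

    if not estimates:
        return fundamentals

    non_fundamentals = sum(e * w for e, w in zip(estimates, weights)) / sum(weights)
    return (fundamentals_weight * fundamentals
            + (1 - fundamentals_weight) * non_fundamentals)


# Hypothetical inputs: two polls, so Better Premier is left out.
print(blend_vote_estimate(52.0, 0.2, [60.0, 62.0], [1.0, 1.0], better_premier=58.0))
```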

Step 4: Account for uncertainty related to days out from the election

Firstly, we measure how the average deviation from the final result changes further out from an election (i.e. |voting intention for party X – election result for party X|).

Then, we subtract the average deviation in the last week of polling from each average deviation figure (e.g. for polls taken between 8 – 14 days from an election, the difference would be (average deviation of polls taken 8 – 14 days before an election) – (average deviation of polls taken 0 – 7 days before an election)). The result looks something like this:

We then fit functions to the data using least-squares regression; the fitted functions are then used in our forecast to increase uncertainty (as measured by the scale parameters in our probability distributions) the further out from an election we are. This is also used to increase uncertainty for polls which were taken more than a week ago (in the event that no other polls are available).
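Here is a small sketch of the calculation in this step: bin polls by how many weeks out they were taken, compute the average absolute deviation in each bin, subtract the final-week figure, and fit a curve by least squares. The straight line through the origin used below is a stand-in (the text doesn’t say which functions were fitted), and the poll data is invented.

```python
from collections import defaultdict


def excess_deviation_by_week(polls):
    """polls: iterable of (days_before_election, poll_value, result_value).

    Returns {weeks_out: average |poll - result| minus the final-week average},
    where week 0 covers 0-7 days out, week 1 covers 8-14 days out, and so on.
    """
    by_week = defaultdict(list)
    for days_out, poll, result in polls:
        week = 0 if days_out <= 7 else (days_out - 1) // 7
        by_week[week].append(abs(poll - result))
    mean_dev = {week: sum(devs) / len(devs) for week, devs in by_week.items()}
    baseline = mean_dev[0]
    return {week: dev - baseline for week, dev in sorted(mean_dev.items())}


def fit_line_through_origin(excess):
    """Least-squares slope for excess = slope * weeks_out (stand-in model)."""
    numerator = sum(week * value for week, value in excess.items())
    denominator = sum(week * week for week in excess)
    return numerator / denominator


# Invented polls: (days before election, polled 2pp, actual 2pp).
polls = [(3, 52, 50), (5, 49, 50), (10, 53, 50), (12, 48, 50), (20, 55, 50)]
excess = excess_deviation_by_week(polls)
slope = fit_line_through_origin(excess)
print(excess, slope)
```

The fitted value at a given number of weeks out is what gets added to the forecast’s uncertainty for polls of that age.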

Step 5: Building a probability distribution of the vote

From all of the work above, we can develop probability distributions of the vote (though usually most of the work in doing so is complete by this stage). These are the bell curves you may be familiar with in other forecasts.

Two important things of note are produced by the vote models:

- The expected value – i.e. what the average prediction of the vote for each party is.

- The uncertainty around that expected value, usually expressed as the variance.

Both are used in the development of our vote probability distributions. For parties with more than 25% of the primary vote (i.e. Labor and the Liberals), we opted to use a Gosset’s t-distribution (more commonly known as a Student t-distribution) with 4 degrees of freedom, to better account for the possibility of a massive polling error. This decision doesn’t make much difference in a close election (e.g. where one side is leading by 52-48), but it does mean that if one side starts reaching landslide leads (e.g. 55-45 or more), their probability of winning won’t increase as rapidly as it would under a normal distribution.
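To see the effect of the fatter tails, here is a sketch comparing win probabilities under a normal distribution and a t-distribution with 4 degrees of freedom, using the closed-form CDF for that particular case. The scale of 3 points is a made-up value, and using the same scale for both curves is a simplification for illustration (it gives the t-distribution a larger variance), not how the forecast itself is calibrated.

```python
import math


def normal_cdf(z):
    """Standard normal CDF."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def t4_cdf(t):
    """Closed-form CDF of Student's t with 4 degrees of freedom."""
    denom = 1 + t * t / 4
    return 0.5 + 0.375 * (t / math.sqrt(denom)) * (1 - (t * t / denom) / 12)


def win_probability(expected_2pp, scale, cdf):
    """P(2pp > 50) for a distribution centred on expected_2pp."""
    return cdf((expected_2pp - 50.0) / scale)


SCALE = 3.0  # hypothetical scale parameter, in percentage points

for expected in (52, 55, 60):
    p_normal = win_probability(expected, SCALE, normal_cdf)
    p_t = win_probability(expected, SCALE, t4_cdf)
    print(expected, round(p_normal, 4), round(p_t, 4))
```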

For parties with less than 25% of the vote, we use a log-normal distribution to account for the asymmetry of swings for such parties. For example, if we have a party polling at 5%, while it is possible (if unlikely) that there might be a big polling error in their favour and they win 15% of the vote, it is not possible that they experience a 10% swing against them and end up with a negative share of the vote (well, not under our current electoral system anyway).

The reason I opted not to use a log-normal distribution for the two major parties is that historically, polling errors for the two major parties (Labor and Liberals) tend to be symmetric, showing little of the skew which one might expect in a log-normal distribution. However, I will note that, at time of writing, the forecast for the Liberals’ primary vote is low enough, and (due to the fact that there currently isn’t any polling) the distribution is so wide, that an asymmetric distribution might become appropriate; I will update this page if that becomes the case.
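As a quick illustration of why a log-normal suits small parties, the sketch below converts a desired mean and standard deviation into log-normal parameters and draws some simulated vote shares. The 5% mean and 3-point standard deviation are invented figures, and the helper name is mine.

```python
import math
import random


def lognormal_params(mean, sd):
    """Convert a mean and standard deviation into log-normal (mu, sigma)."""
    sigma_squared = math.log(1 + (sd / mean) ** 2)
    mu = math.log(mean) - sigma_squared / 2
    return mu, math.sqrt(sigma_squared)


# Hypothetical minor party: expected vote 5%, standard deviation 3 points.
mu, sigma = lognormal_params(5.0, 3.0)

rng = random.Random(0)  # seeded so the sketch is repeatable
draws = [rng.lognormvariate(mu, sigma) for _ in range(10_000)]

print(min(draws) > 0)                 # simulated shares are never negative
print(sum(d > 10 for d in draws))     # but large upside surprises do occur
```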

Update (18/Feb): Despite the fact that we got polling, the polls suggest a lower Liberal primary vote than our priors suggested. While I’m not entirely confident in the leaked polls we have (the minimal information available means I’m not sure about e.g. undecided voters, whether they accounted for whether or not a party was running in every electorate etc), I’ve added them into the model anyway, as we don’t have any other sources of info (with some reduction of weight and certainty as compared to a regular poll).

As a result, there are indeed seats where the Liberal vote is starting to get too close to 0 for comfort. After much experimentation, I’ve opted to switch the Liberal vote model to a log-t distribution with 6 degrees of freedom, which should maintain the same level of uncertainty while minimising the chance that the Liberal vote reaches 0 in some seats.

Part II: Translating a vote prediction into a seat prediction

Step 6: Estimating the leanings of each electorate

This is where we go back to step 1 and use our historical elections dataset to estimate how each electorate votes, relative to the state at large. We do this for every major party and every minor party which has been split out in statewide polling (so Labor, Liberals, Nationals, Greens and One Nation). If there are electorates where e.g. One Nation did not contest at the last election, we use a demographic regression to predict how the electorate will vote relative to the state.

The lean figure is calculated by redistributing the electorates from the last two elections (in this case, that would be the data from the 2013 and 2017 WA state elections) to the current boundaries, and then subtracting from it the average primary vote in contested electorates for that party in each election.

For example, for the electorate of Southern River:

Labor’s estimated primary vote, 2013: 34%

Labor’s estimated primary vote, 2017: 49.7%

Southern River’s estimated Labor lean, 2013: 34% – 33.1% = +0.9%

Southern River’s estimated Labor lean, 2017: 49.7% – 42.2% = +7.5%

We then calculate a Labor lean estimate for 2021 by using a 2:1 weight for the most recent election vs the previous election. (This is the ratio which works best empirically without having to resort to (likely) overfitted ratios like 2.12:1. It also holds up well when cross-testing against other recent state elections, e.g. QLD.)

Southern River’s Labor lean estimate: (0.9% + 2 × 7.5%) / 3 = 5.3%

This figure is then added onto the statewide vote prediction for that party. For example, if Labor is expected to win 35% of the vote, then our expected value (or, roughly speaking, the “middle” of our forecast) for Labor in Southern River would be 40.3%.
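The Southern River example translates directly into code. This is just the arithmetic above wrapped in functions; the function names are mine, and the 35% statewide figure is the hypothetical one from the example.

```python
def lean(electorate_vote, average_vote):
    """Electorate's vote relative to the average in contested electorates."""
    return electorate_vote - average_vote


def weighted_lean(previous_lean, latest_lean, latest_weight=2, previous_weight=1):
    """Weighted average of the last two leans (2:1 towards the latest)."""
    total = latest_weight + previous_weight
    return (previous_weight * previous_lean + latest_weight * latest_lean) / total


# Southern River, Labor primary vote (figures from the example above).
lean_2013 = lean(34.0, 33.1)
lean_2017 = lean(49.7, 42.2)
lean_2021 = weighted_lean(lean_2013, lean_2017)

statewide_forecast = 35.0  # hypothetical statewide Labor primary vote
expected_vote = statewide_forecast + lean_2021
print(round(lean_2021, 1), round(expected_vote, 1))
```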

Step 7: Estimating the elasticity of each electorate

In addition to estimating the lean of each electorate, we also estimate how elastic, or how swingy it is. Elastic electorates will tend to move more in response to a statewide swing – for example, in a statewide swing of 2% to the Liberals, an elastic electorate might swing 2.4% to the Liberals, while an inelastic electorate might only swing 1.6% to the Liberals. For example, our estimates have Geraldton as one of the most elastic electorates (elasticity = 1.2) while Rockingham is one of the least elastic electorates (elasticity = 0.9); the 2pp swings to the Liberals for both districts are shown below:

| Election | Statewide swing | Geraldton (elasticity = 1.2) | Rockingham (elasticity = 0.9) |
|---|---|---|---|
| 2008 | +4.1 | +5.0 | +0.7 |
| 2013 | +5.4 | +14.3 | -1.8 |
| 2017 | -12.8 | -21.5 | -10.2 |

We estimate elasticity by first running a linear regression for the vote in the region contained by each polling-place, with the statewide vote being the explanatory variable and the vote at that polling-place being the dependent variable. The regression will output two values, the slope of the fitted line and the intercept. As the slope tells us how much we expect the vote in this polling-place to change in response to a statewide change, we use that for our elasticity estimate.

To avoid overfitting to noise in the data, we weight the fitted slope based on how well it fits the data, as measured by the p-value of the slope (basically the probability we would get a slope this extreme with this amount of error under the null hypothesis – I’ll explain what that is in a bit); with the weight assigned to the fitted slope = (1 – p).

The remaining weight is assigned to the null hypothesis, which is basically what we would expect if our model had no statistical significance. As we’re attempting to model differences in elasticity between electorates, the null hypothesis would be that there is no difference in elasticity between electorates. If that is the case, then the best way to model how a statewide swing translates to electorate swings would be uniform swing, which is basically elasticity = 1. Hence, e.g. for a polling-place where the linear regression outputs a fitted slope of 1.7755, p-value = 0.0136, the elasticity estimate at this stage would be:

Elasticity estimate = 1.7755 × (1 – 0.0136) + 1 × 0.0136 = 1.764953

Afterwards, to further reduce the possibility of overfitting, we weight each elasticity estimate towards 1 based on how many data-points were available for the fitting of each linear regression. Backtesting on historical state elections suggests that a weight of 10 elections for the null hypothesis (elasticity = 1) works best; so if the above example was based on 3 data points, the estimated elasticity would be:

Elasticity estimate = ((1.764953 × 3) + (1 × 10))/(10 + 3) = 1.176528

The above calculations are only performed for polling-places where we have three or more elections’ worth of data to work with; if we don’t then we simply assign them an elasticity of 1 for further calculation (i.e. uniform swing).
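
The two shrinkage steps above can be sketched in Python as follows. This is a minimal illustration rather than the model's actual code: the function name and its arguments are hypothetical, and the slope and p-value are assumed to come from a linear regression fitted elsewhere.

```python
def polling_place_elasticity(slope, p_value, n_elections,
                             prior_weight=10, min_elections=3):
    """Shrink a fitted regression slope towards uniform swing (elasticity = 1)."""
    # Fewer than three elections of data: fall back to uniform swing.
    if n_elections < min_elections:
        return 1.0
    # Step 1: weight the fitted slope by (1 - p); the rest goes to elasticity = 1.
    significance_weighted = slope * (1 - p_value) + 1.0 * p_value
    # Step 2: blend with a null hypothesis worth `prior_weight` elections.
    return ((significance_weighted * n_elections + 1.0 * prior_weight)
            / (n_elections + prior_weight))


# Reproduces the worked example in the text.
print(round(polling_place_elasticity(1.7755, 0.0136, 3), 6))  # 1.176528
```

Keeping the null-hypothesis weight as a parameter makes it easy to re-run a backtest with values other than 10.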

To calculate the elasticity estimate for each electorate, we then weight each polling-place’s elasticity estimate by how many votes were cast there at the last election and calculate a weighted average.

For example: if we had an electorate with 2 polling-places, A and B, at which 7000 and 3000 votes were cast respectively, and whose elasticity estimates were 1.1 and 0.8 respectively, the elasticity estimate for that electorate would be:

Electorate elasticity estimate = ((7000 × 1.1) + (3000 × 0.8))/(7000 + 3000) = 1.01
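
The same vote-weighted average, as a short Python sketch (the function name and the list-of-pairs input format are assumptions made for illustration):

```python
def electorate_elasticity(polling_places):
    """Vote-weighted average of polling-place elasticities.

    `polling_places` is a list of (votes_cast, elasticity) pairs.
    """
    total_votes = sum(votes for votes, _ in polling_places)
    weighted_sum = sum(votes * elasticity for votes, elasticity in polling_places)
    return weighted_sum / total_votes


# Polling-places A and B from the example above.
print(round(electorate_elasticity([(7000, 1.1), (3000, 0.8)]), 2))  # 1.01
```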

This approach has its advantages and drawbacks. It directly measures how much an electorate swings in response to statewide swings instead of trying to model elasticity by assuming it’s driven by some other factor (FiveThirtyEight’s elasticity estimates seem to be based on demographic data), and it can be computed with the data on hand. However, it’s subject to being a lagging statistic, in that rapid population change can massively alter the elasticity of a polling place, which our model cannot pick up. Nevertheless, the elasticity estimates generally perform well in backtesting, and for most electorates, it doesn’t change the estimated swing by much (as compared to a uniform swing model).

Step 8: Estimating the vote for micro-parties and independents

Using historical data, we’ve found that, excluding exceptional circumstances, micro-party and independent candidates tend to draw from a limited “pool” of micro-party voters. (Exceptional circumstances include but are not limited to: when a major party (Labor/LibNat) doesn’t run in that electorate, when a micro-party/independent incumbent MP is running, or when an incumbent MP who was elected as a member of a different party switches party or recontests as an independent.) In other words, increasing the number of micro-party and independent candidates in an electorate tends to reduce the average vote share per micro-party/independent candidate, rather than increase it (as would be the case if each micro-party/independent had their own “base” which would only vote for them and not any other micro-parties).

(note that this is a general description; there are obviously plenty of counter-examples)

Hence, to estimate the vote for micro-parties and independents, we first take all the micro-parties and independents who are running again in an electorate and adjust their vote share from the last election to account for any changes in the total number of micro-parties/independents running. For new micro-parties and independents, we then assign them the projected micro-party/independent vote for a candidate running in an electorate with the same number of micro-party/independent candidates.

If a micro-party is no longer running in a certain electorate, we redistribute their votes as they were distributed in the instant-runoff process at the last election. There are just two exceptions: we use a demographic regression to estimate the votes for conservative Christian minor parties (Australian Christians, Christian Democratic Party, Family First) and for the Shooters, Fishers and Farmers party.

For the former, we have enough election data to perform said regression (variants of such parties have been running since 2001), and for the latter, I felt it was fairly self-evident that a micro-party named “Shooters, Fishers and Farmers” would likely have some demographic correlation.

Neither makes much of a difference though; minor parties have had very little success in the WA Legislative Assembly (as opposed to NSW/Vic/QLD, where minor parties have won some electorates).

Step 9: Adjusting for candidate effects

Many candidates have a “personal vote”, or a certain segment of the electorate who would vote for them but not for any other candidate of their party. Personal vote effects tend to be tied to electoral experience in that electorate (e.g. a popular mayor of a city running for the electorate which that city is in), but can also be linked to name recognition (celebrity candidates and/or well-known leaders of minor parties e.g. Clive Palmer and Pauline Hanson).

We group candidates into the following categories:

- Major party incumbents: incumbent MPs from either the Labor, Liberal or National parties. These MPs tend to get a small boost in their vote, usually worth about 2% as compared to a generic candidate from their party.

- Minor party incumbents: incumbent MPs from any party other than Labor/Liberal/National (this includes independent MPs). Generally speaking, minor party incumbents have a much larger personal vote than major party incumbents, winning about 10 – 20% more of the primary vote than a generic candidate from their party/independent would.

- Party-switcher incumbents: incumbent MPs who have switched party over the course of their term, and are now re-contesting their seat as a member of a different party or as an independent. Most such MPs tend to be major-to-minor or major-to-independent switchers; there have been very few major-to-major switchers (which makes modelling them harder e.g. Ian Blayney in Geraldton).

Generally, both major-to-minor switchers and minor-to-minor switchers tend to win 5 – 15% more of the vote than they would if they were just a generic member of their new party; however I will note that the minor-to-minor switcher data is overwhelmingly One Nation defectors (who proceeded to lose), so I’m a little less confident in it (it’s not an issue at this particular election though).

Given how few major-to-major switchers there are, for this election, I’ve opted to split the difference between Type 1 and Type 3 for Geraldton’s Ian Blayney and project that the Nationals would gain 6% of the vote there from the Liberals (relative to 2017), though this is subject to change with new data (e.g. seat polling of Geraldton).

- Prior electoral experience in the area: these are candidates who have previously represented some part of the electorate at a different level of government – e.g. local councilors, mayors. This tends to be a fairly common route for independents to enter state parliament; see Sandy Bolton in Noosa (QLD) or Greg Piper in Lake Macquarie (NSW) for examples.

This factor usually isn’t worth much for major parties (just 1 – 2%), but can be worth quite a bit for independents and minor parties – the average councilor/mayor who runs for state parliament in WA wins about 16% of the vote, a large jump from the 4% averaged by other independents.

- Celebrity candidates: candidates who have a degree of name recognition who don’t have prior electoral experience. Although I’ve split this out as its own category, I don’t believe I have enough data to be certain as to how well such candidates perform relative to other, generic candidates (especially at the state level). Since there aren’t many of such candidates at the state level (and no particularly well-known candidates in this state election – i.e. no Pauline Hanson or Clive Palmer), I’ve opted not to model this candidate category for Meridiem 2021.

We adjust the projected primary votes for each party in each electorate based on whether their candidate falls into one of the above categories. Note that these adjustments are only applied if there is some change in the party candidate’s status from/at the last election. For example, we wouldn’t add 2% to Mark McGowan’s primary vote projection as he was already the Labor incumbent MP for Rockingham at the last state election; any incumbency bonus should already be reflected in his primary vote from the last election.

Step 10: Quality control

This is a relatively simple step, where we check to ensure that there are no negative primary vote shares (if we find any, we usually assign them the rounded-down lowest primary vote share ever recorded for that party [if Labor/Liberal/National/Green/One Nation] or for micro-parties/independents [if Others]).

We also redistribute vote shares for electorates where the primary-vote shares do not total to 100, by dividing all projected vote shares by the total vote (so for example, if Labor was projected to win 40% of the vote, but the primary vote of all parties total to 120%, Labor’s vote projection would be changed to 33.3%).

It’s not an ideal way of redistributing primary votes, but it usually doesn’t make much difference (most vote share totals fall between 96% and 104%) and works fairly well.
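
A rough Python sketch of both checks is below. The function name, the dictionary format and the floor values are all hypothetical placeholders (the real floors would be the historical minimums described above), and applying the floor before rescaling is an assumption about ordering.

```python
def quality_control(shares, floors):
    """Replace negative primary-vote shares with a floor, then rescale to 100%.

    `shares` and `floors` map party name -> vote share in percent.
    """
    fixed = {party: (floors[party] if share < 0 else share)
             for party, share in shares.items()}
    total = sum(fixed.values())
    # Divide every share by the total so the electorate sums to 100%.
    return {party: 100 * share / total for party, share in fixed.items()}


projected = {"Labor": 40.0, "Liberal": 50.0, "Others": 30.0}  # totals 120%
floors = {"Labor": 20.0, "Liberal": 10.0, "Others": 1.0}      # placeholder values
print(round(quality_control(projected, floors)["Labor"], 1))  # 33.3
```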

Part III: Simulating the election

With all of the groundwork out of the way, we run multiple (50 000) simulations of the state election using our model to produce our probabilistic forecast of the results.

Step 11: Simulating the statewide first preference vote

Using the models we developed in Step 5, we randomly generate statewide polling errors to model how the election could turn out given the information we have on hand.

Usually, when we first run the model, we also do a quality check similar to step 10 – checking, for example, that there are no negative vote shares. We also use distribution-fitting tools (fitDist() in R) on a sample of our randomly-generated errors to check that the distribution we produced in step 5 is correctly being used to generate polling errors.

Step 12: Simulating minor party voters’ preference flows

Since we live in a society with a preferential voting system, first-preference votes don’t determine the outcomes of elections on their own. It’s also important to simulate the possibility of a shift in preferencing behaviour by minor-party voters; a recent example is One Nation voters preferencing the LNP ahead of Labor 67% of the time at the 2019 federal election, which was up from just 54% in 2016.

To do so, we use recent state and federal elections to model minor party voters’ preferencing behaviour. We give additional weight to the preference flows at the most recent state election in WA (2017), then fit a beta distribution (a distribution which can only go from 0 to 1) to the preference flows for the Greens, One Nation, and Others.

For each random simulation, we then use these probability distributions to randomly generate a preference flow for each of these three categories. These preference flows are then used in classic (Labor vs Liberal/National) contests as well as to calculate a 2pp estimate for each electorate.

(I am aware that in WA, the National Party does not maintain a formal Coalition with the Liberals like they do in other states, which is one of the reasons I model them separately on my forecast page. However, to my knowledge, the Western Australian Electoral Commission (WAEC) only calculates a preference flow between Labor and either the Liberals or the Nationals for their two-party-preferred calculation. Hence, I don’t have much data on how a Labor vs Liberal preference flow would differ, if at all, from a Labor vs Liberal/National preference flow.)
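
As a rough illustration of this step, the sketch below fits a beta distribution by the method of moments and draws one simulated flow from it. The fitting method is an assumption (the text doesn't say how the fit is done), and the data are illustrative: the first two values echo the federal One Nation figures quoted above, while the third value and all the weights are made-up placeholders.

```python
import random


def fit_beta(flows, weights):
    """Method-of-moments beta fit to weighted preference flows (fractions, 0 to 1)."""
    total = sum(weights)
    mean = sum(w * f for f, w in zip(flows, weights)) / total
    var = sum(w * (f - mean) ** 2 for f, w in zip(flows, weights)) / total
    common = mean * (1 - mean) / var - 1
    return mean * common, (1 - mean) * common  # (alpha, beta)


# Share of One Nation preferences going to the Liberal/National candidate.
flows = [0.54, 0.67, 0.60]   # last entry is a placeholder for the 2017 WA election
weights = [1.0, 1.0, 2.0]    # extra weight on the most recent state election
alpha, beta = fit_beta(flows, weights)

rng = random.Random(2021)
simulated_flow = rng.betavariate(alpha, beta)  # one draw per simulation
```

Because a beta draw always lies between 0 and 1, no simulation can produce an impossible preference flow.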

Step 13: Generating correlated deviations from statewide swing

Usually, when there’s a swing to one party or another, the swing is very rarely anything close to evenly distributed; there will always be electorates where the party does really well and others where their vote hardly budges. Apart from each electorate’s elasticity, another factor that can explain deviations from uniform swing is correlation among similar electorates. For example, if the government were to implement a policy popular with farmers, agricultural electorates like Roe and Central Wheatbelt are likely to swing to the government, or at least not swing against the government as much.

One way of measuring how similar electorates are is by analysing their demographics; electorates with similar demographic profiles will likely contain voters with similar priorities, issues and political leanings.

To measure how relevant a particular set of demographics is, we performed a regression of each category of demographics (e.g. age, education) from the Census versus the vote for each party (Labor, Liberal/National, Green, One Nation) in each electorate at past elections. Doing so gets us the following order of importance:

- Occupation type, e.g. Managers, Professionals, Labourers etc

- Unemployment rate (more relevant for 2pp than primary vote but still very important)

- Housing type – e.g. owned, renting, social housing etc.

- Industry (in combination with occupation type) – e.g. agricultural, mining, education etc

- Age range. I used the service age ranges from the Census.

We then weighted each category by how well it explained the vote and deviation from statewide swing in past WA state elections (more specifically, the adjusted R² of each regression) in calculating our similarity matrices (basically a table of numbers telling you how similar each district is, and what that means in terms of generating a correlated swing).

(Although we’re trying to model correlated deviation from statewide swing here, I felt that a demographic which explained a large chunk of the variation in the vote should be upweighted even if it historically didn’t explain a large chunk of the variation in swing deviation. My reasoning is that if something is correlated with vote share, it’s very plausible that it can change in some way in future elections, which would produce a correlated deviation from statewide swing which hasn’t occurred before.)

Correlated deviations were then generated by randomly producing a “multiplier” for each demographic using a normal distribution fitted onto our regressions. For example, in a simulation where mortgaged home owners swung to the Liberals (relative to the rest of the state), we might produce a multiplier of 1.2, which tells us that for every 1% increase in mortgaged home owners in an electorate we should expect a deviation from uniform swing of +1.2% in the Liberals’ favour.

We then apply a correction to ensure that the correlated deviation generator has not altered the statewide vote share generated in Step 11. The purpose of the correlated deviation generator is to simulate where parties do better or worse relative to their statewide vote share, not simulate their vote share (that’s the job of the statewide vote simulator).
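
A simplified sketch of the multiplier and the correction, for a single demographic, is shown below. Everything here is illustrative: the function name, the electorates and their figures are hypothetical, and subtracting the vote-weighted mean deviation is just one way the correction could be done.

```python
import random


def correlated_deviations(demo_share, votes, mult_mean, mult_sd, rng):
    """Demographic-driven deviations from uniform swing, in points to the Liberals.

    demo_share: electorate -> % of voters in the demographic (e.g. mortgaged owners)
    votes:      electorate -> votes cast, used as weights in the correction
    """
    multiplier = rng.gauss(mult_mean, mult_sd)  # one draw per simulation
    raw = {e: multiplier * demo_share[e] for e in votes}
    # Correction: remove the vote-weighted mean so the statewide vote is unchanged.
    total_votes = sum(votes.values())
    mean_dev = sum(raw[e] * votes[e] for e in votes) / total_votes
    return {e: raw[e] - mean_dev for e in votes}


demo_share = {"A": 30.0, "B": 40.0, "C": 50.0}   # hypothetical electorates
votes = {"A": 20000, "B": 25000, "C": 22000}
deviations = correlated_deviations(demo_share, votes, 0.0, 0.1, random.Random(13))
```

After the correction, electorates with more of the demographic than average move one way and the rest move the other, while the statewide total stays where Step 11 put it.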

The correlated deviation generator is also activated if we receive seat polling; it can use them to update the vote predictions of similar electorates. This can help us determine if the electoral map might help or hurt a particular party on election day.

Historically, we find that the average electorate tends to deviate from uniform swing by about 5% and a demographic regression can usually explain about 1 – 3 points of said deviation. For the purposes of our model, we randomly vary the amount that demographics “explain” every simulation, allowing for the possibility of completely uncorrelated deviation from uniform swing.

Step 14: Generating random, uncorrelated deviations from uniform swing

In addition to correlated deviations from statewide swing, there are also deviations from statewide swing that aren’t easily explainable by demographics.

To simulate these, we first randomly generate an “average deviation from statewide swing” for each simulation. Some state elections (like 2017) see bigger deviations from statewide swing than others, though the average deviation has historically been between 4.5% and 5.4%.

We then calculate the size of the random deviations necessary to “make up the difference” between the simulated average deviation and the correlated deviation in Step 13. For example, if our simulated average deviation figure is 5%, and correlated deviation is supposed to explain 2 points of that, then the necessary amount of random deviation to “make up the difference” would be about 4.6%.
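
One way to reproduce the 4.6% figure is to assume the correlated and random components are independent, so that they combine in quadrature rather than adding directly. That assumption belongs to this sketch, as does the function name:

```python
import math


def random_deviation_needed(average_deviation, correlated_deviation):
    """Random deviation required so both components combine to the target size."""
    return math.sqrt(average_deviation ** 2 - correlated_deviation ** 2)


print(round(random_deviation_needed(5.0, 2.0), 1))  # 4.6
```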

For Labor, the Liberals and the Nationals, we then generate the random deviations using a t-distribution with 9 degrees of freedom (t9), while a log-normal distribution is used for the other parties. Historically, electorate deviations from the state 2pp swing follow a t11 distribution; using t9 for the major parties helps to reproduce this in our electorate 2pp simulations.

Update (18/Feb): Due to the inclusion of new polling, the Liberal vote in many seats is now low enough (< 20%) that it should produce some skew in the results (read: the Liberal vote is dropping to the point where the distribution I normally reserve for minor parties has become appropriate in some electorates). Additionally, I missed the fact that there are some electorates where the National vote tends to be at very low levels; I’ve fixed both issues by switching the Liberals’ and Nationals’ random deviation distribution to a log-normal distribution.
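
For readers who want to experiment, both kinds of draw can be generated with Python's standard library alone. This sketch reads “t9” as a Student's t-distribution with 9 degrees of freedom; the scale and sigma values are placeholders, not the model's fitted parameters.

```python
import math
import random


def student_t_draw(df, scale, rng):
    """Draw from a scaled Student's t-distribution with `df` degrees of freedom."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-squared with `df` degrees of freedom
    return scale * z / math.sqrt(chi2 / df)


def lognormal_vote(expected_share, sigma, rng):
    """Log-normal draw whose median is the expected share; it can never go negative."""
    return rng.lognormvariate(math.log(expected_share), sigma)


rng = random.Random(14)
labor_deviation = student_t_draw(9, 4.0, rng)   # symmetric, fat-tailed deviation
liberal_vote = lognormal_vote(18.0, 0.3, rng)   # right-skewed simulated vote share
```

The log-normal draw shows why it suits parties polling at low levels: the simulated share is skewed to the right and bounded below by zero.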

Step 15: Quality control, simulations

We basically repeat whatever we do in Step 10 with each simulation to ensure that we don’t get any impossible outcomes like negative vote shares and/or vote shares not adding to 100%.

Step 16: Simulating final two candidates

For each electorate, we attempt to simulate which two candidates will make it into the final-two count.

Firstly, if any candidate has more than 33.3% of the first-preference vote (>=33.4%), they’re included in the final-two count. When a candidate has more than a third of the vote to begin with, it’s not possible for one of the other candidates to knock them into third place.

Next, if there are still positions to be filled, we simulate the probability of getting into the final-two for each remaining candidate. We do this using a logistic regression, with three explanatory variables (in order of importance):

- Whether the candidate’s primary vote share is in the top two for that electorate (dummy variable, 0 or 1)

- The candidate’s primary vote share

- The candidate’s primary vote share, minus the second-largest primary vote share in that electorate

We then use these probabilities to randomly select candidates for the final-two count; allowing us to simulate the possibility of various matchups (e.g. the probability of a Labor vs Liberal contest in Albany instead of Labor vs National).
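
The selection logic might look something like the sketch below. The logistic coefficients are invented placeholders rather than fitted values, and drawing the remaining finalists in proportion to their modelled probabilities is an assumption about how the random selection works.

```python
import math
import random


def final_two(primaries, coef, rng):
    """Pick two finalists from a dict of party -> primary vote share (%)."""
    ranked = sorted(primaries, key=primaries.get, reverse=True)
    second_largest = primaries[ranked[1]]
    # Anyone with more than a third of the vote cannot be pushed into third place.
    finalists = [p for p in ranked if primaries[p] > 100 / 3]
    remaining = [p for p in ranked if p not in finalists]
    while len(finalists) < 2:
        weights = []
        for p in remaining:
            # Logistic score built from the three explanatory variables in the text.
            x = (coef["intercept"]
                 + coef["top_two"] * (p in ranked[:2])
                 + coef["share"] * primaries[p]
                 + coef["gap"] * (primaries[p] - second_largest))
            weights.append(1 / (1 + math.exp(-x)))
        pick = rng.choices(remaining, weights=weights)[0]
        finalists.append(pick)
        remaining.remove(pick)
    return finalists


coef = {"intercept": -4.0, "top_two": 2.0, "share": 0.15, "gap": 0.1}  # placeholders
primaries = {"Labor": 45.0, "Liberal": 25.0, "National": 22.0, "Greens": 8.0}
matchup = final_two(primaries, coef, random.Random(16))
```

Running this many times for the same electorate gives the share of simulations in which each matchup occurs.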

Step 17: Simulating classic contests

A “classic” contest is an Australian Electoral Commission (AEC) term for a contest which comes down to the Labor candidate and a Coalition (Liberal/National) candidate. These are fairly simple – we just use the primary votes and the preference flows we generated in Step 12 to produce a 2-party-preferred estimate in each electorate and determine the winner from that.
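
In code, a classic contest boils down to something like this (a minimal sketch; the primary votes and preference flows shown are made-up numbers, and the function name is hypothetical):

```python
def two_party_preferred(primaries, flows_to_labor):
    """Labor 2pp (%) from primary votes and minor-party preference flows.

    primaries:      party -> primary vote share in percent
    flows_to_labor: minor party -> fraction of its preferences reaching Labor
    """
    labor = primaries["Labor"]
    for party, flow in flows_to_labor.items():
        labor += primaries[party] * flow
    return labor


primaries = {"Labor": 42.0, "Liberal": 38.0, "Greens": 10.0,
             "One Nation": 5.0, "Others": 5.0}
flows = {"Greens": 0.80, "One Nation": 0.40, "Others": 0.50}  # illustrative only
labor_2pp = two_party_preferred(primaries, flows)
winner = "Labor" if labor_2pp > 50 else "Liberal/National"
print(round(labor_2pp, 1), winner)  # 54.5 Labor
```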

Step 18: Simulating non-classic contests

Contests that aren’t between Labor and a Liberal/National candidate are more difficult to simulate. For these, we attempt to simulate preference flows to the final two candidates using historical distributions of preferences between similar candidates (e.g. looking at Roe to estimate how Labor voters’ preferences split in a Liberal vs National contest). We then determine the winner of that electorate in that simulation using the two-candidate-preferred estimate.

Finally, we collect all these simulations to build our seat distributions over at the forecast page.

Go to the Western Australia 2021 forecast >>

Download a copy of the latest simulations from Meridiem here.